HRMS Retention Time Correction and Alignment: A Comprehensive Guide for Reliable Metabolomics and Proteomics Data

Abstract

This article provides a comprehensive overview of retention time (RT) correction and alignment for Liquid Chromatography-High-Resolution Mass Spectrometry (LC-HRMS) data, a critical preprocessing step in untargeted metabolomics and proteomics. Aimed at researchers, scientists, and drug development professionals, it covers foundational concepts explaining the sources and impacts of RT variability. The guide details methodological approaches, from traditional warping functions to advanced deep learning and multi-way analysis tools like ROIMCR and metabCombiner. It further offers practical troubleshooting strategies for common challenges and a comparative analysis of software performance to enhance data quality, ensure reproducibility, and unlock robust biological insights in large-cohort studies.

Understanding Retention Time Variability: The Critical Foundation for HRMS Data Integrity

Why RT Alignment is a Non-Negotiable Preprocessing Step in Untargeted HRMS

In untargeted High-Resolution Mass Spectrometry (HRMS), retention time (RT) alignment serves as a foundational preprocessing step that directly determines the reliability and accuracy of downstream analytical results. Liquid Chromatography coupled to HRMS (LC-HRMS) has become a premier analytical technology owing to its superior reproducibility, high sensitivity, and specificity [1]. However, the comparability of measurements carried out with different devices and at different times is inherently compromised by RT shifts resulting from multiple factors, including matrix effects, instrument performance variations, column aging, and contamination [2] [3]. These technical variations create substantial analytical noise that can obscure biological signals and lead to erroneous conclusions if not properly corrected.

The fundamental challenge in untargeted HRMS analysis lies in distinguishing between analytical artifacts and true biological variation across multiple samples. Without robust RT alignment, corresponding analytes cannot be accurately mapped across sample runs, fundamentally undermining the quantitative and comparative analysis that forms the basis of metabolomic, proteomic, and food authentication studies [2] [3]. This correspondence problem—finding the "same compound" in multiple samples—becomes increasingly critical as cohort sizes grow, making RT alignment not merely an optimization step but an essential prerequisite for meaningful data interpretation.

The Critical Impact of RT Variation on Data Quality

Consequences of Poor RT Alignment

The ramifications of inadequate RT alignment permeate every subsequent stage of HRMS data analysis. In feature detection and quantification, misalignment leads to inconsistent peak matching, where the same metabolite is incorrectly identified as different features across samples or different metabolites are erroneously grouped together. This directly compromises data integrity by introducing false positives and negatives in differential analysis [3]. The problem is particularly acute in large-scale studies where samples are analyzed over extended periods or across multiple instruments.

In machine learning applications for sample classification, unaddressed RT shifts substantially reduce model accuracy and generalizability. For instance, in geographical origin authentication of honey, RT variations between analytical batches can overshadow true biological variation, leading to misclassification and reduced predictive performance [2]. Similarly, in clinical biomarker discovery, poor alignment can obscure subtle but statistically significant metabolic changes, preventing the identification of crucial disease indicators. The absence of proper RT alignment thus represents a critical bottleneck in the translation of HRMS data into biologically or clinically meaningful insights.

Quantitative Impacts on Metabolite Identification

Table 1: Impact of RT Alignment on Metabolite Detection and Quantification

| Parameter | Without RT Alignment | With Proper RT Alignment | Improvement |
|---|---|---|---|
| Feature Consistency | High variance across runs | Low variance across runs | >70% reduction in technical variation [3] |
| Missing Values | 30-50% missing data in feature table | <10% missing values | 60-80% reduction [2] |
| Quantitative Accuracy | RSD >20-30% | RSD <10-15% | >50% improvement [2] |
| Identification Sensitivity | Limited to high-abundance features | Comprehensive including low-abundance | 25% increase in detected features [3] |

Methodological Approaches to RT Alignment

Established Alignment Methods

Current RT alignment methodologies predominantly fall into two categories: warping function methods and direct matching methods. Warping models correct RT shifts between runs using linear or non-linear warping functions, with popular tools including XCMS and MZmine 2 employing this approach [3]. These methods establish mathematical functions that transform the RT space of one sample to match another, effectively stretching or compressing the chromatographic timeline to maximize overlap between corresponding features. While effective for monotonic shifts (where the direction of RT drift is consistent across the separation), these methods struggle with non-monotonic shifts commonly encountered in complex sample matrices.

Direct matching methods attempt to perform correspondence solely based on similarity between specific signals from run to run without a warping function. Representative tools include RTAlign and MassUntangler, which rely on sophisticated algorithms to identify corresponding features across samples through multidimensional similarity measures [3]. While offering potential advantages for non-monotonic shifts, these methods have traditionally demonstrated inferior performance compared to warping function approaches due to uncertainties in MS signals and computational intensity, particularly with large datasets.

Emerging Deep Learning Approaches

Recent advances in deep learning have enabled the development of hybrid approaches that overcome limitations of traditional methods. DeepRTAlign represents one such innovation, combining a coarse alignment (pseudo warping function) with a deep neural network-based direct matching model [3]. This architecture can simultaneously address both monotonic and non-monotonic RT shifts, leveraging the strengths of both methodological paradigms.

The DeepRTAlign workflow begins with precursor detection and feature extraction, followed by coarse alignment that linearly scales RT across samples and applies piecewise correction based on average RT shifts within defined windows [3]. Subsequently, features are binned by m/z, and input vectors constructed from RT and m/z values of target features and their neighbors are processed through a deep neural network classifier that distinguishes between feature pairs that should or should not be aligned. This approach has demonstrated superior performance across multiple proteomic and metabolomic datasets, improving identification sensitivity without compromising quantitative accuracy [3].

Experimental Protocols for RT Alignment

Protocol 1: Standard Warping Function Alignment with XCMS

Principle: This protocol uses a non-linear warping function to correct RT shifts by aligning chromatographic peaks across samples through dynamic time warping algorithms. The approach is particularly effective for monotonic RT drifts commonly observed in batch analyses [2].

Materials and Reagents:

- LC-HRMS system (e.g., Thermo Scientific Q Exactive series)

- Chromatography column (e.g., Hypersil Gold C18, 150 × 2.1 mm)

- Mobile phases: water and acetonitrile with 0.1% formic acid

- Quality control (QC) samples: pooled from all experimental samples

- R software environment with XCMS package installed

Procedure:

- Data Conversion: Convert raw MS files to open-format mzML files using MSConvert (ProteoWizard software package) with the peakPicking filter to convert profile mode data to centroid mode [2].

- Parameter Optimization: Set key XCMS parameters for feature detection (these are centWave parameters): `peakwidth = c(5,20)`, `snthresh = 10`, `noise = 1000`, and `prefilter = c(3,1000)`.

- Peak Detection: Perform chromatographic peak detection using the `matchedFilter` or `centWave` algorithm optimized for your instrument type and resolution.

- Initial Alignment: Use the `retcor` function with the `obiwarp` method for initial RT correction with the following parameters: `profStep = 1`, `center = 3`, and `response = 1`.

- Peak Grouping: Apply the `group` function to group corresponding peaks across samples with `bw = 5` (bandwidth) and `mzwid = 0.015` (m/z width).

- Fill Peaks: Use the `fillPeaks` function to integrate signal in regions where peaks were detected in some but not all samples.

- Quality Assessment: Evaluate alignment quality by examining RT deviation plots and the number of overlapping features in QC samples.

Troubleshooting:

- If alignment fails for specific samples, increase the `bw` parameter to allow for greater RT flexibility.

- For poor peak matching, adjust `mzwid` according to your instrument's mass accuracy (typically 0.01-0.05 for high-resolution instruments).

- If processing time is excessive, increase `profStep` to 2, though this may reduce alignment precision.

Protocol 2: Deep Learning-Based Alignment with DeepRTAlign

Principle: This protocol employs a deep neural network to learn complex RT shift patterns from the data itself, enabling correction of both monotonic and non-monotonic shifts without relying solely on warping functions [3].

Materials and Reagents:

- LC-HRMS data from large cohort studies (≥100 samples recommended)

- Linux operating system (required for DeepRTAlign)

- Python (≥3.8) with PyTorch (≥1.8.0) and required dependencies

- Sufficient computational resources (≥16GB RAM, GPU recommended)

Procedure:

- Software Setup: Install DeepRTAlign following the installation instructions provided with the tool for Linux systems [3]. Note that https://github.com/AGSeifert/BOULS is the repository for the BOULS workflow (Protocol 3) [2], not for DeepRTAlign.

- Feature Extraction: Run XICFinder to detect precursors and extract features from raw MS files using a mass tolerance of 10 ppm for isotope pattern detection [3].

- Coarse Alignment: Perform linear RT scaling to a standardized range (e.g., 80 minutes) and divide samples into 1-minute windows for piecewise correction against an anchor sample.

- Binning and Filtering: Group features by m/z using default parameters (`bin_width = 0.03`, `bin_precision = 2`) and optionally filter to retain only the highest intensity feature in each m/z window.

- Model Application: Process the binned features through the pre-trained DeepRTAlign deep neural network classifier (three hidden layers, 5000 neurons each) [3].

- Quality Control: Run the built-in QC module to calculate the false discovery rate (FDR) of alignment results using decoy samples.

- Result Validation: Compare the number of aligned features with and without DeepRTAlign and assess quantitative consistency in QC samples.

Troubleshooting:

- If training a new model is necessary, collect 400,000 feature-feature pairs (200,000 positive, 200,000 negative) from identification results as ground truth [3].

- For suboptimal performance on specific datasets, adjust the binning parameters (`bin_width` and `bin_precision`) to better match your instrument's precision.

- If processing large datasets, consider increasing the RT window size from 1 minute to reduce computational load at the potential cost of alignment precision.

Protocol 3: Bucketing Approach for Large-Scale Studies (BOULS)

Principle: The BOULS (Bucketing Of Untargeted LCMS Spectra) approach enables separate processing of untargeted LC-HRMS data obtained from different devices and at different times through retention time alignment to a central spectrum and a 3D bucketing step [2].

Materials and Reagents:

- Multiple LC-HRMS instruments (e.g., minimum of 2-3 systems for validation)

- Hydrophilic interaction liquid chromatography (HILIC) and reverse phase (RP) columns

- Mobile phase additives: acetic acid for negative ion mode, formic acid for positive ion mode

- Reference standards for system suitability testing

Procedure:

- Data Acquisition: Perform LC-HRMS analysis using consistent chromatographic methods across instruments. For honey authentication, use HILIC in negative ion mode for polar compounds and RP in positive ion mode for non-polar compounds [2].

- Central Spectrum Selection: Choose a high-quality representative sample as the central reference spectrum for all subsequent alignments.

- Retention Time Alignment: Align all samples to the central spectrum using the established xcms workflow with modified parameters for consistency across instruments.

- Bucketing Implementation: Divide the aligned spectrum into three-dimensional buckets (retention time, m/z, and feature intensity), summing the total intensity of signals within each bucket.

- Data Integration: Compile the bucketed data into a unified feature table without requiring batch correction, feature identification, or feature matching for successful classification [2].

- Model Building: Apply machine learning algorithms (e.g., Random Forest) to the compiled data for sample classification, using out-of-bag error estimation for validation.

- Continuous Learning: Implement a framework where newly acquired spectra can be classified and added to the training dataset without re-evaluation of the entire dataset.

Troubleshooting:

- If inter-instrument variation remains high, increase the number of QC samples analyzed across all instruments to improve alignment.

- For poor classification accuracy, optimize the bucket size to balance resolution and signal-to-noise ratio.

- When adding new instruments to the workflow, ensure sufficient system suitability testing and cross-calibration with existing systems.
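The bucketing step in the procedure above can be sketched in a few lines of Python. This is a minimal illustration, not the BOULS implementation: the function name `bucket_features`, the bucket edges, and the feature values are all hypothetical, and NumPy's `histogram2d` is used here simply as a convenient way to sum intensities on a retention time by m/z grid.

```python
import numpy as np

def bucket_features(rt, mz, intensity, rt_edges, mz_edges):
    """Sum feature intensities inside each RT x m/z bucket and flatten the grid."""
    grid, _, _ = np.histogram2d(rt, mz, bins=[rt_edges, mz_edges], weights=intensity)
    return grid.ravel()

# Hypothetical aligned features: retention time (min), m/z, intensity
rt = np.array([1.2, 1.4, 5.6, 5.7])
mz = np.array([150.05, 150.08, 300.10, 455.29])
intensity = np.array([1000.0, 500.0, 2000.0, 750.0])

# Hypothetical bucket edges; real bucket sizes must be tuned per method
rt_edges = np.array([0.0, 5.0, 10.0])
mz_edges = np.array([100.0, 200.0, 400.0, 500.0])

# One fixed-length vector per sample, usable as a classifier input row
bucket_vector = bucket_features(rt, mz, intensity, rt_edges, mz_edges)
print(bucket_vector)
```

Because every sample is projected onto the same fixed grid, the resulting vectors have identical length and column meaning across instruments, which is what allows new spectra to be appended to a training set without re-matching features.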

Table 2: Research Reagent Solutions for HRMS RT Alignment Studies

| Reagent/Category | Function in RT Alignment | Application Examples | Technical Notes |
|---|---|---|---|
| HILIC Column (Accucore-150-Amide-HILIC) | Separation of polar compounds | Honey origin authentication [2] | Use in negative ion mode with acetic acid modifier |
| RP Column (Hypersil Gold C18) | Separation of non-polar compounds | Meat authentication [1] | Use in positive ion mode with formic acid modifier |
| Sorbic Acid Solution (2-10% in ACN-water) | Internal standard for normalization | Inter-instrument alignment [2] | Concentration varies by ion mode (2% positive, 10% negative) |
| QC Samples (Pooled from all study samples) | Monitoring system performance | Large cohort studies [2] [3] | Analyze at regular intervals throughout sequence |
| Trypsin (BioReagent grade) | Protein digestion for proteomic alignment | Meat speciation studies [1] | Use 1.0 mg/mL solution, incubate overnight at 37°C |

Analytical Workflow Integration

Validation and Quality Control

Establishing robust quality control measures is essential for verifying RT alignment effectiveness. The coefficient of variation (CV) for internal standards should be <15% in QC samples, with >75% of aligned features demonstrating RT deviations <0.1 minutes across technical replicates [2]. Multivariate analysis tools such as Principal Component Analysis (PCA) should show tight clustering of QC samples regardless of analytical batch, indicating successful removal of technical variation.
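The two acceptance criteria above (internal standard CV and the share of features within an RT tolerance) are straightforward to compute once QC replicates are available. The sketch below is illustrative only: the helper names and all numeric values are hypothetical, and measuring RT deviation as the maximum distance from the per-feature median is one reasonable assumption among several possible definitions.

```python
import numpy as np

def coefficient_of_variation(values):
    """CV in percent: sample standard deviation divided by the mean."""
    values = np.asarray(values, dtype=float)
    return float(np.std(values, ddof=1) / np.mean(values) * 100.0)

def fraction_within_rt_tolerance(rt_matrix, tolerance_min=0.1):
    """Fraction of features whose largest RT deviation from the feature
    median (across replicates) stays below the tolerance, in minutes."""
    rt = np.asarray(rt_matrix, dtype=float)
    median_rt = np.median(rt, axis=1, keepdims=True)
    max_deviation = np.max(np.abs(rt - median_rt), axis=1)
    return float(np.mean(max_deviation < tolerance_min))

# Hypothetical internal standard peak areas in four QC injections
internal_standard_areas = [1.00e6, 1.08e6, 0.95e6, 1.03e6]

# Hypothetical aligned RTs (min): rows are features, columns are QC replicates
aligned_rts = np.array([
    [5.02, 5.04, 5.03],
    [7.50, 7.48, 7.55],
    [9.10, 9.35, 9.12],    # one replicate is still misaligned
    [12.00, 12.01, 11.99],
])

cv_percent = coefficient_of_variation(internal_standard_areas)
fraction_ok = fraction_within_rt_tolerance(aligned_rts)
print(f"CV = {cv_percent:.1f}% (target < 15%)")
print(f"Features within 0.1 min: {fraction_ok:.0%} (target > 75%)")
```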

For the BOULS approach, validation includes demonstrating that classification models maintain accuracy >90% when applied to data from different instruments and timepoints [2]. With DeepRTAlign, quality control involves calculating the false discovery rate (FDR) of alignment results using decoy samples, with successful implementations achieving FDR <1% while increasing feature identification by 15-25% compared to traditional methods [3].

Retention time alignment stands as a non-negotiable preprocessing step in untargeted HRMS, forming the critical bridge between raw instrumental data and biologically meaningful results. As HRMS applications expand toward large-cohort studies, multi-center investigations, and continuous learning models, robust RT alignment becomes increasingly fundamental to data integrity. The development of sophisticated methods like DeepRTAlign and BOULS represents significant advances in addressing both monotonic and non-monotonic shifts while enabling cross-platform and cross-temporal data integration. Implementation of rigorous, validated RT alignment protocols ensures that the full analytical power of modern HRMS platforms is realized in research and diagnostic applications.

Retention time (RT) stability is a cornerstone of reliable liquid chromatography-high-resolution mass spectrometry (LC-HRMS) analysis in untargeted metabolomics, proteomics, and environmental screening. RT shifts, defined as non-biological variations in the elution time of an analyte, can severely compromise feature alignment, quantitative accuracy, and compound identification across large cohort studies [3] [4]. Within the broader context of HRMS data preprocessing research, understanding and correcting these shifts is paramount for data integrity. The primary sources of these shifts can be categorized into instrumental variations, column-related factors, and batch effects. This application note delineates these key sources and provides detailed protocols for their diagnosis and correction, leveraging the latest research and methodologies.

The following table summarizes the core sources of RT shifts and their quantitative impact on data analysis, as evidenced by recent benchmarking studies.

Table 1: Key Sources of Retention Time Shifts and Their Impacts

| Source Category | Specific Source | Demonstrated Impact | Citation |
|---|---|---|---|
| Instrument | Mass Accuracy Drift | Mass error >3 ppm can cause failure in MS2 selection and molecular formula assignment. | [5] |
| Instrument | Time Since Calibration | Positive mode exhibits higher mass accuracy and precision than negative mode. | [5] |
| Column | Mobile Phase pH & Chemistry | Most impactful factors for the accuracy of retention time projection and prediction models. | [6] |
| Column | Column Hardware Inertness | Inert hardware enhances peak shape and analyte recovery for metal-sensitive compounds like phosphorylated molecules. | [7] |
| Batch Effects | Confounded Batch-Group Effects | Batch effects confounded with biological groups pose a major challenge, requiring specific correction algorithms. | [8] |
| Batch Effects | Data Preprocessing Software | Different software (e.g., MZmine, XCMS, MS-DIAL) select different features as statistically important, significantly affecting downstream results. | [9] |

Experimental Protocols for Assessing and Correcting RT Shifts

Protocol: High-Resolution Accurate Mass System Suitability Test (HRAM-SST)

This protocol evaluates instrumental mass accuracy, a prerequisite for reliable RT alignment, and is adapted from recent methodology [5].

1. Reagent Preparation:

- Prepare a mixture of 13 reference standards covering both ionization modes and a range of chemical families, polarities, and m/z values (e.g., Acetaminophen, Caffeine, Verapamil).

- Create a stock solution at 2.5 μg/mL in methanol and store at -20°C.

- For each injection, prepare a working solution at 50 ng/mL in methanol.

2. Instrumental Analysis:

- Inject the HRAM-SST working solution at the beginning and end of every sample analysis batch.

- Use the same chromatographic column and mobile phases as the analytical method.

- Perform the LC-HRMS analysis using the standard data acquisition method.

3. Data Processing and Acceptance Criteria:

- For each SST injection, check the mass accuracy for all 13 compounds.

- A successful calibration requires a mass error below 3 ppm for the majority of compounds.

- If mass accuracy exceeds this threshold, consider system recalibration before proceeding with sample acquisition.
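The acceptance check in step 3 rests on the standard mass error formula, error (ppm) = (observed m/z − theoretical m/z) / theoretical m/z × 10^6. A minimal sketch follows. The function names are hypothetical, the m/z values are approximate [M+H]+ values chosen for illustration rather than taken from the cited method, and interpreting "the majority of compounds" as more than half is an assumption.

```python
def mass_error_ppm(observed_mz, theoretical_mz):
    """Signed mass error in parts per million."""
    return (observed_mz - theoretical_mz) / theoretical_mz * 1e6

def sst_passes(measurements, tolerance_ppm=3.0, min_fraction=0.5):
    """True when more than `min_fraction` of the compounds fall within the
    ppm tolerance (assumption: 'majority' means more than half)."""
    within = [abs(mass_error_ppm(obs, theo)) < tolerance_ppm
              for obs, theo in measurements]
    return sum(within) / len(within) > min_fraction

# (observed m/z, theoretical m/z); approximate illustrative values only
sst_measurements = [
    (195.0880, 195.0877),  # caffeine
    (152.0712, 152.0706),  # acetaminophen, deliberately outside 3 ppm
    (455.2910, 455.2904),  # verapamil
]

for obs, theo in sst_measurements:
    print(f"{theo:.4f}: {mass_error_ppm(obs, theo):+.2f} ppm")
print("SST passed:", sst_passes(sst_measurements))
```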

Protocol: Evaluating Data Preprocessing Software for Untargeted Metabolomics

This protocol outlines a comparative approach for selecting preprocessing software, a significant source of variation in feature detection and RT alignment [9].

1. Experimental Design:

- Analyze a defined set of samples (e.g., 40 cancer patient urines vs. 40 healthy control urines) using UHPLC-HRMS.

2. Data Preprocessing:

- Process the identical raw dataset through multiple preprocessing software tools (e.g., MZmine, XCMS, MS-DIAL, iMet-Q, Peakonly).

- Use default or optimized parameters for peak picking, integration, and alignment in each software.

3. Comparative Metrics:

- Peak Integration Quality: Compare the number of detected features and integration consistency against manual curation.

- Statistical Analysis: Apply consistent statistical tests (e.g., Mann-Whitney U test) to the feature lists from each software.

- Model Accuracy: Use top features from each software to build diagnostic models (e.g., using logistic regression) and compare their accuracies.

4. Software Selection:

- Prefer software that demonstrates peak integration comparable to manual methods, high feature detection sensitivity, and yields diagnostic models with superior performance. Studies suggest MS-DIAL and iMet-Q often perform well for both identification and statistical analysis [9].

Protocol: Retention Time Index Projection Using a Generalized Additive Model

This protocol describes a method to project RTs from a public database to a specific chromatographic system, addressing column and mobile phase-induced shifts [6].

1. Data Collection:

- Analyze a set of calibration chemicals (e.g., 41 compounds) and suspect chemicals (e.g., 45 compounds) on both the source (CS~source~) and target (CS~NTS~) chromatographic systems.

2. Retention Time Index (RTI) Calculation:

- For each chromatographic system, calculate the RTI for every detected compound using the formula: RTI = (RT~compound~ - RT~first calibrant~) / (RT~last calibrant~ - RT~first calibrant~) × 1000

- This scaling normalizes RTs to a range of 0–1000, accounting for differences in flow rate and column length [6].

3. Model Fitting and Projection:

- Using the calibration chemicals detected on both systems, fit a Generalized Additive Model (GAM) between the RTIs from CS~source~ and CS~NTS~.

- Apply the fitted GAM to the "known" RTIs of suspect chemicals from the CS~source~ to project their expected RTIs in the CS~NTS~.

4. Performance Evaluation:

- The accuracy of this projection is directly linked to the similarity (e.g., mobile phase pH, column chemistry) between the CS~source~ and CS~NTS~ [6].
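The RTI scaling and the projection between systems can be illustrated with a short sketch. Note the substitution: the protocol fits a Generalized Additive Model, whereas this example uses plain piecewise-linear interpolation between calibrants (`numpy.interp`) as a simpler stand-in that needs no additional libraries. All retention times and function names are hypothetical.

```python
import numpy as np

def retention_time_index(rt, rt_first, rt_last):
    """Scale RTs to 0-1000 between the first and last eluting calibrant."""
    rt = np.asarray(rt, dtype=float)
    return (rt - rt_first) / (rt_last - rt_first) * 1000.0

def project_rti(rti_query, rti_source_cal, rti_target_cal):
    """Map source-system RTIs onto the target system by linear interpolation
    between calibrants (a stand-in for the GAM named in the protocol)."""
    order = np.argsort(rti_source_cal)
    return np.interp(rti_query, rti_source_cal[order], rti_target_cal[order])

# Hypothetical calibrant RTs (min) measured on both chromatographic systems
cal_rt_source = np.array([1.0, 4.0, 8.0, 12.0, 16.0])
cal_rt_target = np.array([0.8, 2.9, 6.5, 9.0, 11.0])

rti_source = retention_time_index(cal_rt_source, cal_rt_source[0], cal_rt_source[-1])
rti_target = retention_time_index(cal_rt_target, cal_rt_target[0], cal_rt_target[-1])

# A suspect chemical known to elute at 6.0 min on the source system
suspect_rti_source = retention_time_index([6.0], cal_rt_source[0], cal_rt_source[-1])
projected_rti = project_rti(suspect_rti_source, rti_source, rti_target)
print(projected_rti)
```

A GAM would replace `project_rti` with a smooth fitted curve, which is less sensitive to noise in any single calibrant than straight-line segments are.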

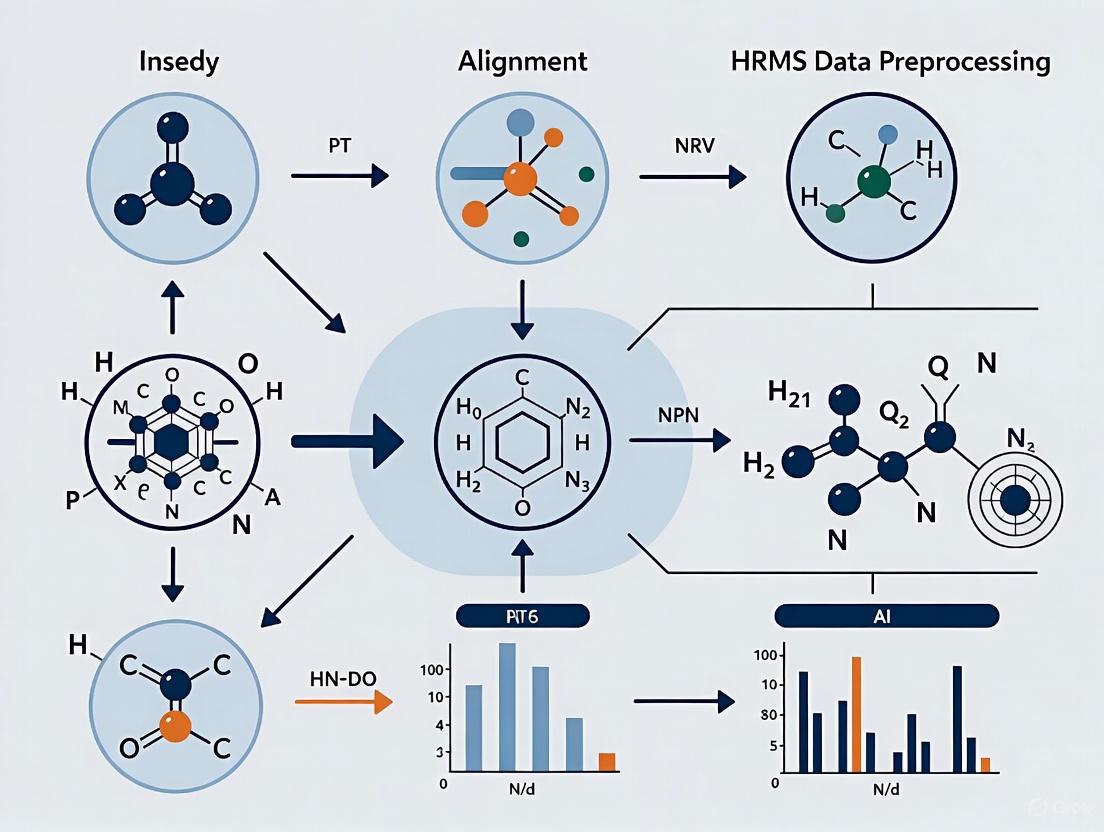

Workflow Visualization for RT Shift Investigation

The following diagram illustrates a comprehensive workflow for diagnosing and addressing the key sources of RT shifts in an LC-HRMS data preprocessing pipeline.

The Scientist's Toolkit: Essential Reagents and Materials

The following table lists key reagents, materials, and software tools essential for experiments aimed at characterizing and correcting RT shifts.

Table 2: Research Reagent Solutions for RT Shift Analysis

| Category | Item | Function & Application | Citation |
|---|---|---|---|
| Reference Standards | HRAM-SST Mixture (13 compounds) | Empirically confirms system mass accuracy readiness before/after sample batches. | [5] |
| Reference Standards | NORMAN Calibration Chemicals (41 compounds) | Enables retention time index (RTI) projection between different chromatographic systems. | [6] |
| Chromatography | Inert HPLC Columns (e.g., Halo Inert) | Passivated hardware minimizes analyte adsorption, improving peak shape and recovery for metal-sensitive compounds. | [7] |
| Chromatography | C18, Biphenyl, and HILIC Columns | Provides alternative selectivity for method development and analyzing diverse compound classes. | [7] |
| Software & Algorithms | Data Preprocessing Tools (MS-DIAL, MZmine, XCMS) | Extracts features (m/z, RT, intensity) from raw LC-HRMS data; performance varies. | [9] [4] |
| Software & Algorithms | Batch-Effect Correction Algorithms (BECAs) | Removes unwanted technical variation. Protein-level correction with Ratio or ComBat is often robust. | [8] |
| Software & Algorithms | Deep Learning Aligner (DeepRTAlign) | Corrects complex monotonic and non-monotonic RT shifts in large cohort studies. | [3] |

The Impact of Uncorrected Data on Feature Matching and Biomarker Discovery

In liquid chromatography-mass spectrometry (LC-MS)-based proteomic and metabolomic experiments, retention time (RT) alignment is a critical preprocessing step for accurately matching corresponding features (e.g., peptides or metabolites) across multiple sample runs [10]. Uncorrected RT shifts, caused by matrix effects, instrument variability, and chromatographic column aging, introduce significant errors in feature matching. This directly compromises downstream statistical analysis and the sensitivity of biomarker discovery pipelines [3]. In large cohort studies, where thousands of features are tracked across hundreds of samples, the cumulative effect of even minor RT inconsistencies can obscure true biological signals, leading to both false positives and false negatives [11] [12]. This article details the quantitative impact of RT misalignment and provides structured protocols to enhance data quality for more reliable biomarker identification and validation.

The Critical Role of Retention Time Alignment

Liquid chromatography (LC), when coupled with mass spectrometry (MS), separates complex biological samples to reduce ion suppression and increase analytical depth. However, the retention time of the same analyte can vary between runs due to:

- Matrix effects from complex biological samples like plasma or serum [3].

- Instrument performance fluctuations, including pump pressure inconsistencies and column degradation [13].

- Temperature changes and mobile phase composition variations [10].

When uncorrected, these RT shifts disrupt the correspondence process—the matching of the same compound across different samples [3]. This failure directly impacts biomarker discovery by:

- Reducing Identification Sensitivity: In data-dependent acquisition (DDA) mode, only 15-25% of precursors are typically identified. Match Between Runs (MBR) algorithms rely on accurate RT alignment to transfer identifications from identified to unidentified precursors across runs. Poor alignment causes this transfer to fail, leading to a significant loss of data for subsequent analysis [3].

- Compromising Quantitative Accuracy: Incorrect feature matching results in inaccurate quantification, as the abundance of a given feature is measured from inconsistent peaks across samples. This introduces noise and bias into the statistical models used to distinguish between patient groups (e.g., healthy vs. diseased) [11] [12].

Classification of Alignment Methods and Their Limitations

Computational methods for RT alignment fall into two primary categories, each with distinct strengths and weaknesses for handling different types of RT shifts [10] [3]:

Table 1: Categories of Retention Time Alignment Methods

| Method Category | Principle | Representative Tools | Limitations |
|---|---|---|---|
| Warping Function | Corrects RT shifts using a linear or non-linear function applied to the entire chromatogram. | XCMS [14], MZmine 2 [10], OpenMS [11] | Struggles with non-monotonic shifts (local, direction-changing variations) because the warping function is inherently monotonic [3]. |
| Direct Matching | Performs correspondence based on feature similarity (e.g., m/z, RT, intensity) without a global warping function. | RTAlign [13], MassUntangler [15] | Performance can be inferior due to uncertainty in MS signals when relying solely on feature similarity [3]. |

The fundamental limitation of existing traditional tools is their inability to handle both monotonic and non-monotonic RT shifts simultaneously, a common challenge in large-scale studies [3].

Quantitative Impact of Uncorrected RT Shifts

The performance of an alignment algorithm directly influences the number of true biological features that can be reliably quantified, which is the foundation of biomarker discovery.

Table 2: Performance Comparison of Alignment Tools on a Proteomic Dataset

| Tool | Alignment Principle | True Positives Detected | False Discovery Rate (FDR) | Key Strength/Weakness |
|---|---|---|---|---|
| DeepRTAlign [3] | Deep Learning (Coarse alignment + DNN) | ~95% | < 1% | Effectively handles monotonic and non-monotonic shifts. |
| Tool A [3] | Warping Function | ~85% | ~5% | Fails with complex, local RT shifts. |
| Tool B [3] | Direct Matching | ~78% | ~8% | Performance suffers from signal uncertainty. |

Table 2 illustrates that advanced alignment methods can significantly increase the number of correctly aligned features, thereby expanding the pool of potential biomarkers available for downstream analysis. The use of poorly performing alignment tools directly translates into a loss of statistical power. In a typical untargeted metabolomics experiment, high-resolution mass spectrometers can limit m/z shifts to less than 10 ppm, making RT alignment the most variable parameter and thus the most critical for accurate feature matching [3]. The failure to align correctly results in a higher number of missing values across samples and reduces the ability of feature selection algorithms (e.g., Random Forest, SVM-RFE) to identify subtle but biologically significant changes, especially in the early stages of disease [11].

Protocols for Effective Retention Time Alignment

Protocol: Deep Learning-Based Alignment with DeepRTAlign

DeepRTAlign is an advanced tool that combines a coarse alignment with a deep neural network (DNN) to address complex RT shifts [3].

Workflow Diagram: DeepRTAlign

Step-by-Step Methodology:

Precursor Detection and Feature Extraction:

- Input: Raw MS files.

- Process: Use a feature detection tool (e.g., XICFinder, Dinosaur) to detect isotope patterns and group them into features across the retention time dimension [3].

- Parameters: A mass tolerance of 10 ppm is typically used in this step.

Coarse Alignment:

- Linearly scale the RT in all samples to a common range (e.g., 80 minutes).

- Divide all samples (except an anchor sample) into pieces by a fixed RT window (e.g., 1 minute).

- Calculate the average RT shift for features in each piece compared to the anchor sample.

- Apply the average shift to all features within each piece to achieve a rough, piecewise linear alignment [3].
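The coarse alignment steps above can be sketched as follows. This is a simplified illustration rather than DeepRTAlign's own code: it assumes that each sample feature has already been tentatively paired with an anchor feature (for example by m/z tolerance), so `sample_rt[i]` and `anchor_rt[i]` refer to the same candidate feature. The function names, run length, and RT values are hypothetical.

```python
import numpy as np

def scale_rt(rt, run_length, target_range=80.0):
    """Linearly rescale RTs so every run spans the same range (minutes)."""
    return np.asarray(rt, dtype=float) / run_length * target_range

def coarse_align(sample_rt, anchor_rt, window=1.0):
    """Shift each RT window of the sample by its mean offset to the anchor.

    Assumes sample_rt[i] and anchor_rt[i] are tentatively matched features.
    """
    sample_rt = np.asarray(sample_rt, dtype=float)
    anchor_rt = np.asarray(anchor_rt, dtype=float)
    piece = np.floor(sample_rt / window).astype(int)  # window index per feature
    corrected = sample_rt.copy()
    for p in np.unique(piece):
        in_piece = piece == p
        mean_shift = np.mean(anchor_rt[in_piece] - sample_rt[in_piece])
        corrected[in_piece] += mean_shift
    return corrected

# Hypothetical sample acquired with a 100 min gradient, rescaled to 80 min
sample_scaled = scale_rt([12.5, 13.0, 50.0, 50.5], run_length=100.0)
# Hypothetical RTs of the matched features in the (already scaled) anchor
anchor_scaled = np.array([10.3, 10.5, 39.8, 40.0])

corrected = coarse_align(sample_scaled, anchor_scaled)
print(corrected)
```

In practice the mean shift of a window would be applied to every feature in that window, including those without a match in the anchor; the sketch only carries the matched ones to stay short.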

Binning and Filtering:

- Group all features based on their m/z values using a defined `bin_width` (e.g., 0.03) and `bin_precision` (e.g., 2 decimal places).

- Optional: For each sample in each m/z bin, retain only the feature with the highest intensity within a user-defined RT range to reduce complexity [3].

Input Vector Construction:

- For a potential feature-feature pair from two different samples, construct a vector using the RT and m/z of the target feature and its two adjacent features (before and after in RT).

- The vector includes both original values and difference values between the two samples, which are then normalized.

- This results in a 5x8 vector that serves as the input to the DNN [3].

Deep Neural Network (DNN) Classification:

- Model: A DNN with three hidden layers (5,000 neurons each) acts as a binary classifier.

- Training: The model is trained on 400,000 feature-feature pairs (200,000 positive pairs from the same peptide, 200,000 negative pairs from different peptides) to distinguish between features that should and should not be aligned.

- Output: The model predicts whether a feature pair represents the same compound [3].

Quality Control:

- A decoy sample is created by randomly shuffling features within an m/z window.

- The false discovery rate (FDR) of the alignment is calculated based on the number of matches to the decoy sample, ensuring the reliability of the final aligned feature list [3].
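The decoy idea can be illustrated with the sketch below. It assumes that "shuffling features within an m/z window" means permuting RT values among features that share an m/z window, and that the FDR is estimated as decoy matches divided by target matches; both are assumptions for the example, as are the function names.

```python
import random

def make_decoy(features, mz_window=0.03, seed=0):
    """Build a decoy feature list by shuffling RTs inside each m/z window.

    features: list of (mz, rt) tuples.
    """
    rng = random.Random(seed)
    groups = {}
    for mz, rt in features:
        groups.setdefault(int(mz // mz_window), []).append((mz, rt))
    decoy = []
    for members in groups.values():
        rts = [rt for _, rt in members]
        rng.shuffle(rts)  # m/z values stay put, RTs are permuted
        decoy.extend((mz, rt) for (mz, _), rt in zip(members, rts))
    return decoy

def estimate_fdr(n_target_matches, n_decoy_matches):
    """Estimate FDR as the ratio of decoy matches to target matches."""
    if n_target_matches == 0:
        return 0.0
    return min(1.0, n_decoy_matches / n_target_matches)
```

Because the decoy keeps the m/z distribution of a real sample but scrambles elution order, matches to it approximate how often the aligner accepts a pair by chance.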

Protocol: Traditional Warping-Based Alignment

For laboratories using established warping methods, the following protocol outlines key steps and considerations.

Workflow Diagram: Warping-Based Alignment

Step-by-Step Methodology:

Peak Picking:

Landmark Selection:

- Identify a set of corresponding features ("landmarks") present across all or most samples. These can be either:

- Internal standards spiked into each sample.

- Ubiquitous endogenous features with high intensity and consistent MS2 spectra [10].

Warping Function Calculation:

- Model: Establish a mathematical function that maps the RT of a sample run to a reference run (e.g., a pooled quality control sample or the first run).

- Algorithms: Use methods like Correlation Optimized Warping (COW) or Dynamic Time Warping (DTW) to determine the optimal warping function based on the landmarks [10].

RT Transformation:

- Apply the calculated warping function to the RT of every feature in the sample, thereby adjusting its position to align with the reference run.
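As a minimal sketch of the transformation step, the code below builds a warping function from landmark pairs by piecewise-linear interpolation of the RT shift. This is a deliberately simpler stand-in for COW or DTW, and the function name `warp_rt` is hypothetical.

```python
import numpy as np

def warp_rt(sample_rt, landmarks_sample, landmarks_reference):
    """Map sample RTs onto the reference time axis using landmark pairs."""
    ls = np.asarray(landmarks_sample, dtype=float)
    lr = np.asarray(landmarks_reference, dtype=float)
    order = np.argsort(ls)
    ls, lr = ls[order], lr[order]
    # A warping function must preserve elution order, so landmarks whose
    # order differs between the two runs cannot be fitted.
    if np.any(np.diff(lr) < 0):
        raise ValueError("landmarks change elution order between runs")
    sample_rt = np.asarray(sample_rt, dtype=float)
    # Interpolate the shift between landmarks; outside the landmark range
    # np.interp holds the nearest landmark's shift constant.
    shift = np.interp(sample_rt, ls, lr - ls)
    return sample_rt + shift

# Example: two landmarks observed at 2.0 and 8.0 min in the sample
# and at 2.5 and 8.2 min in the reference run.
corrected = warp_rt([1.0, 5.0, 9.0], [2.0, 8.0], [2.5, 8.2])
```

The explicit order check makes the monotonicity constraint visible: swapped landmarks raise an error instead of silently producing a distorted time axis.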

Considerations: This method works well for simple, monotonic drifts but will perform poorly if non-monotonic shifts are present, as the warping function cannot correct for local distortions [3].

The Scientist's Toolkit: Essential Reagents and Software

Table 3: Key Research Reagent Solutions for HRMS Biomarker Studies

| Item | Function in Workflow | Application Note |

|---|---|---|

| Stable Isotope-Labeled Internal Standards (SILIS) | Added to each sample to monitor and correct for RT shifts and quantify analyte abundance. | Essential for targeted validation (e.g., using SRM/PRM) and can aid alignment in warping methods [16]. |

| Quality Control (QC) Pool Sample | A pooled sample from all study samples, injected repeatedly throughout the analytical sequence. | Used to condition the system, monitor stability, and serves as an ideal reference for RT alignment [12]. |

| Depletion/Enrichment Kits | Immunoaffinity columns for removing high-abundance proteins (e.g., albumin, IgG) from plasma/serum. | Reduces dynamic range, improves detection of low-abundance potential biomarkers, and reduces matrix effects [13] [16]. |

| Trypsin (Sequencing Grade) | Protease for digesting proteins into peptides in bottom-up proteomics. | Standardizes protein analysis; digestion efficiency and completeness are critical for reproducibility [13]. |

| LC-MS Grade Solvents | High-purity solvents for mobile phase preparation and sample reconstitution. | Minimizes background chemical noise and ion suppression, improving feature detection and quantification [14]. |

Uncorrected retention time shifts are a major bottleneck in LC-MS-based omics studies, directly leading to inefficient feature matching and reduced sensitivity in biomarker discovery. The adoption of robust alignment protocols, particularly modern tools like DeepRTAlign that handle complex RT variations, is no longer optional but a necessity for generating high-quality, reproducible data in large cohort studies. By implementing the detailed protocols and utilizing the essential tools outlined in this document, researchers can significantly improve the fidelity of their data, thereby increasing the likelihood of discovering and validating clinically relevant biomarkers for early disease diagnosis and drug development.

Warping Functions, Direct Matching, and Data Dimensionality

Core Terminology and Quantitative Comparison in HRMS Alignment

In liquid chromatography-mass spectrometry (LC-MS)-based proteomic and metabolomic experiments, retention time (RT) alignment is a critical preprocessing step to ensure that the same biological entities from different samples are correctly matched for subsequent quantitative and statistical analysis. The two primary computational strategies for addressing RT shifts are warping functions and direct matching, each with distinct approaches to handling data dimensionality [3] [17].

Warping function methods correct RT shifts by applying a linear or non-linear function that warps the time axis of a sample run to match a reference run. A key characteristic of these methods is that they are monotonic, meaning they preserve the order of peaks and cannot correct for peak swaps [3] [17]. These algorithms typically use the complete chromatographic profile or total ion current (TIC), operating on a one-dimensional data vector (intensity over retention time) for alignment [17].

Direct matching methods, in contrast, attempt to perform correspondence between runs without a warping function. Instead, they rely on the similarity between specific signals, often using features detected in the data (such as m/z and RT) to find corresponding analytes directly [3]. This approach can, in theory, handle non-monotonic shifts, but its performance has been historically limited by the uncertainty inherent in MS signals [3].

The following table summarizes the core characteristics of these approaches and a third, hybrid method.

Table 1: Core Methodologies for Retention Time Alignment in HRMS

| Method Category | Core Principle | Data Dimensionality | Handles Non-Monotonic Shifts? | Representative Tools |

|---|---|---|---|---|

| Warping Functions | Applies a mathematical function to warp the RT axis of a sample to a reference. | Primarily 1D (e.g., TIC) [17]. | No [3] | COW, PTW, DDTW [18] [17] |

| Direct Matching | Matches features between runs based on similarity of their signals (m/z, RT). | Higher-dimensional (e.g., feature lists with m/z, RT, intensity) [3]. | Yes, in theory [3] | RTAlign, MassUntangler, Peakmatch [3] |

| Hybrid (Deep Learning) | Combines a coarse warping function with a deep learning model for direct matching. | Multi-dimensional feature vectors [3]. | Yes [3] | DeepRTAlign [3] |

A significant limitation of traditional warping methods is their inability to handle cases of peak swapping, where the elution order of compounds changes between runs due to complex chemical interactions. This phenomenon, once thought rare in LC-MS, is increasingly observed in complex proteomics and metabolomics samples [17]. Furthermore, the alignment of multi-trace data like full LC-MS datasets presents unique challenges. While the alignment is typically performed only along the retention time axis, the high-dimensional nature of the data (m/z and intensity at each time point) offers both challenges and opportunities for developing more robust alignment algorithms [17].

Experimental Protocols for HRMS Alignment

Protocol: DeepRTAlign for Large Cohort Studies

DeepRTAlign is a deep learning-based tool designed to handle both monotonic and non-monotonic RT shifts in large cohort LC-MS data analysis [3].

Workflow Overview:

- Precursor Detection and Feature Extraction: Use a tool like XICFinder (similar to Dinosaur) to detect isotope patterns and merge them into features from raw MS files. A mass tolerance of 10 ppm is typically used [3].

- Coarse Alignment:

- Linearly scale the RT of all samples to a common range (e.g., 80 minutes).

- For each sample, select the feature with the highest intensity for each m/z to create a new list.

- Divide all non-anchor samples into RT windows (e.g., 1 min). For each window, compute the average RT shift of features relative to the anchor sample.

- Apply the average RT shift to all features within each window [3].

- Binning and Filtering: Group features based on their m/z values using a defined `bin_width` (default 0.03) and `bin_precision` (default 2). Optionally, filter to retain only the highest intensity feature within each m/z bin and RT range per sample [3].

- Input Vector Construction for DNN: For a candidate feature pair from two samples, create an input vector using the RT and m/z of the target feature and its two adjacent neighbors (before and after). The vector includes original values and difference values, normalized by base vectors ([5, 0.03] for differences and [80, 1500] for original values) to form a 5x8 matrix [3].

- Deep Neural Network (DNN) Classification: A classifier with three hidden layers (5000 neurons each) distinguishes between true alignments (positive pairs) and non-alignments (negative pairs). The model is trained using a large dataset (e.g., 400,000 pairs) derived from identification results [3].

- Quality Control: A decoy-based method is used to estimate the false discovery rate (FDR) of the final alignment results [3].

Protocol: Self-Calibrated Warping (SCW) for Spectral Data

SCW uses high-abundance "calibration peaks" to estimate a warping function for aligning mass spectra, such as from SELDI-TOF-MS [18].

Workflow Overview:

- Reference Selection: Select a reference spectrum, typically the one with the highest average correlation coefficient with all other spectra [18].

- Preprocessing (Optional): Apply smoothing (e.g., nine-point Savitzky-Golay filter twice) and baseline correction to reduce noise and baseline variance [18].

- Calibration Peak Identification: Identify peaks corresponding to high-abundance proteins that are present across all samples. These peaks have a high signal-to-noise ratio, making alignment reliable [18].

- Warping Function Estimation:

- Align the calibration peaks from a test spectrum to those in the reference spectrum.

- Record the shifts at the apices of these peaks as "calibration points."

- Fit a low-order polynomial (e.g., 3rd or 4th order) or a piecewise polynomial function to these calibration points using weighted least squares fitting. This defines the warping function, w(x) [18].

- Application of Warping: Apply the calculated warping function to the entire test spectrum, shifting all data points according to w(x) [18].
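The warping function estimation can be sketched with a weighted polynomial fit. This is an illustration under stated assumptions, not the published SCW implementation: w(x) is treated as an additive shift, the sign convention (aligned position = x + w(x)) is assumed, and the function names are hypothetical.

```python
import numpy as np

def fit_warp(calib_x, calib_shift, weights=None, order=3):
    """Fit a low-order polynomial w(x) to calibration points.

    calib_x: apex positions of the calibration peaks in the test spectrum.
    calib_shift: shift needed to move each apex onto the reference.
    weights: optional per-point weights; np.polyfit multiplies each
    residual by its weight before squaring.
    """
    coeffs = np.polyfit(calib_x, calib_shift, deg=order, w=weights)
    return np.poly1d(coeffs)

def apply_warp(x, w):
    """Shift every data point of the test spectrum by w(x)."""
    x = np.asarray(x, dtype=float)
    return x + w(x)
```

A usage example would pass the apex positions and measured shifts of the calibration peaks to `fit_warp`, then call `apply_warp` on the full x-axis of the test spectrum. Keeping the polynomial order low limits overfitting when only a handful of calibration peaks are available.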

Protocol: Machine Learning-Enhanced Identification Probability

This protocol uses machine learning to enhance chemical identification confidence in non-targeted analysis (NTA) by integrating predicted retention time indices (RTIs) with MS/MS spectral matching [19].

Workflow Overview:

- Model 1 - Molecular Fingerprint (MF) to RTI: Train a Random Forest (RF) regression model to predict a harmonized RTI value from 790 molecular fingerprints of known calibrants (e.g., 4,713 compounds). This model learns the quantitative structure-retention relationship (QSRR) [19].

- Model 2 - Cumulative Neutral Loss (CNL) to RTI: Train a second RF regression model to predict the RTI from experimental MS/MS spectra. The model uses 15,961 cumulative neutral loss masses and the monoisotopic mass as features, trained on a large dataset of reference spectra (e.g., 485,577 spectra) [19].

- Spectral Library Matching: Use an algorithm like the Universal Library Search Algorithm (ULSA) to match query MS/MS spectra against reference spectral libraries, generating a matching score [19].

- Model 3 - Binary Classification for P(TP): Train a k-nearest neighbors (KNN) classifier to compute the probability of a true positive (P(TP)) spectral match. Input features include the RTI error (between RTI from Model 1 and Model 2), monoisotopic mass, and spectral matching parameters from ULSA. The model is trained on confirmed true positive and semi-synthetic true negative matches [19].

- Identification Probability (IP) Calculation: The average P(TP) for a matched compound is used as its Identification Probability, significantly enhancing annotation confidence compared to spectral matching alone [19].
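The last two steps can be illustrated with a tiny k-nearest-neighbors sketch written in plain NumPy. The two-feature input (RTI error and a spectral match score), the standardization step, and the function names are assumptions for the example; the cited workflow uses more input features.

```python
import numpy as np

def knn_p_tp(train_X, train_y, query_X, k=5):
    """Estimate P(TP) as the fraction of true positives among the k nearest
    training matches, after standardizing each feature column."""
    train_X = np.asarray(train_X, dtype=float)
    train_y = np.asarray(train_y, dtype=float)
    query_X = np.asarray(query_X, dtype=float)
    mu = train_X.mean(axis=0)
    sd = train_X.std(axis=0)
    sd[sd == 0] = 1.0  # guard against constant columns
    tz = (train_X - mu) / sd
    qz = (query_X - mu) / sd
    # Euclidean distance from every query to every training point.
    dist = np.linalg.norm(qz[:, None, :] - tz[None, :, :], axis=2)
    nearest = np.argsort(dist, axis=1)[:, :k]
    return train_y[nearest].mean(axis=1)

def identification_probability(p_tp_values):
    """Average the P(TP) values of all matches assigned to one compound."""
    return float(np.mean(p_tp_values))
```

Averaging over several spectral matches means a compound supported by one strong and one weak match receives an intermediate identification probability rather than the maximum.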

Figure 1: Data preprocessing workflows for retention time alignment and identification.

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Software and Computational Tools for HRMS Data Alignment

| Tool Name | Type/Function | Key Application in Alignment |

|---|---|---|

| DeepRTAlign [3] | Deep Learning Alignment Tool | Corrects both monotonic and non-monotonic RT shifts in large cohort proteomics/metabolomics studies via a hybrid coarse-alignment and DNN model. |

| XCMS [20] | LC-MS Data Processing Platform | A widely used software for metabolomics providing feature detection and retention time correction based on warping functions. |

| MZmine 2 [3] | Modular MS Data Processing | Offers various preprocessing modules, including for chromatographic alignment, for metabolomics and imaging MS data. |

| OpenMS [3] | C++ MS Library & Tools | Provides a suite of tools and algorithms for LC-MS data processing, including retention time alignment and feature finding. |

| Warp2D [21] | Web-based Alignment Service | A high-throughput processing service that uses overlapping peak volume for retention time alignment of complex proteomics and metabolomics data. |

| Matlab Bioinformatics Toolbox (MSAlign) [18] | Commercial Computing Environment | Contains built-in functions like MSAlign for aligning mass spectra, often based on peak matching. |

| R/Python [19] [17] | Programming Languages | Essential environments for implementing custom alignment scripts, machine learning models (e.g., Random Forest, KNN), and data visualization. |

| ULSA (Universal Library Search Algorithm) [19] | Spectral Matching Algorithm | Used for annotating compounds by matching MS/MS spectra against various reference spectral databases. |

From Theory to Practice: A Guide to RT Alignment Algorithms and Software Tools

In liquid chromatography-high-resolution mass spectrometry (LC-HRMS) based proteomic and metabolomic experiments, retention time (RT) alignment is a critical preprocessing step for correlating identical components across different samples [10]. Variations in RT occur due to matrix effects, instrument performance, and changes in chromatographic conditions, making alignment essential for accurate comparative analysis [3]. Traditional warping methods, implemented in widely used open-source software like XCMS and MZmine, correct these RT shifts using mathematical models to align peaks across multiple runs [3] [22]. Within the broader context of HRMS data preprocessing research, these algorithms form the foundational approach for handling monotonic RT shifts, upon which newer, more complex methods are built.

Core Algorithms and Quantitative Comparison

The alignment algorithms in XCMS and MZmine operate on the principle of constructing a warping function that maps the retention times from one run to another. This function corrects for the observed shifts, ensuring that features from the same analyte are correctly grouped. The following table summarizes the core characteristics and algorithms of these two platforms.

Table 1: Core Algorithm Comparison between XCMS and MZmine

| Feature | XCMS | MZmine 2 |

|---|---|---|

| Primary Alignment Method | Obiwarp (non-linear alignment) [23] | Random Sample Consensus (RANSAC) [22] |

| Algorithm Type | Warping function-based [3] | Warping function-based [3] |

| Key Strength | High flexibility with numerous supported algorithms and parameters [23] | Robustness against outlier peaks due to the RANSAC algorithm [22] |

| Typical Input | Peak-picked feature lists from centroid or profile data [24] | Peak lists generated by its modular detection algorithms [25] |

| Handling of RT Shifts | Corrects monotonic shifts [3] | Corrects monotonic shifts [3] |

The performance of these traditional warping methods has been extensively evaluated. In a comparative study of untargeted data processing workflows, XCMS and MZmine demonstrated similar capabilities in detecting true features. Notably, some research recommends combining the outputs of MZmine 2 and XCMS to select the most reliable discriminating markers [26].

Experimental Protocols for Retention Time Alignment

Protocol for RT Alignment using XCMS

The following protocol outlines a standard workflow for peak picking and alignment in XCMS within the Galaxy environment [23].

Step 1: Data Preparation and Import

- Obtain raw LC-MS data files in an open format (e.g., `mzML`, `mzXML`).

- Import files into a data analysis platform. In Galaxy, create a dataset collection containing all sample files to process them efficiently in parallel.

- Use the `MSnbase readMSData` tool to read the raw files and generate `RData` objects suitable for XCMS processing [23].

Step 2: Peak Picking

- Execute the `xcms findChromPeaks` function. Select an appropriate algorithm based on data characteristics:

- Massifquant: A Kalman filter (KF)-based chromatographic peak detection method for centroid mode data. Key parameters include `peakwidth` (e.g., c(20, 50)), `snthresh` (signal-to-noise threshold, e.g., 10), and `prefilter` (e.g., c(3, 100)) [24].

- CentWave: Ideal for high-resolution data with a high sampling rate; effective for detecting peaks with a broader width [24].

- The output is a peak list for each sample, containing columns for `mz`, `mzmin`, `mzmax`, `rtmin`, `rtmax`, `rt` (retention time), `into` (integrated intensity), and `maxo` (maximum intensity) [24].

Step 3: Retention Time Alignment with Obiwarp

- Apply the `xcms adjustRtime` function with the Obiwarp method to perform nonlinear alignment.

- This method calculates a warping function for each sample based on a chosen reference, correcting for monotonic RT drifts across the run set [23].

Step 4: Correspondence and Grouping

- Use the `xcms group` function to match peaks across samples by grouping features with similar m/z and aligned retention times.

- Finally, fill in any missing peaks using a gap-filling algorithm to generate a complete feature table for statistical analysis [23].
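To make the correspondence step concrete, the sketch below groups aligned peaks with a simple greedy tolerance rule and builds a feature table in which missing entries mark candidates for gap filling. This is not the XCMS grouping algorithm; it is a simplified stand-in, and the function names and tolerance defaults are assumptions.

```python
def group_peaks(peaks, mz_ppm=10.0, rt_tol=0.2):
    """Group (sample, mz, rt, into) peaks with similar m/z and aligned RT."""
    groups = []
    for peak in sorted(peaks, key=lambda p: (p[1], p[2])):
        _, mz, rt, _ = peak
        for g in groups:
            g_mz = sum(p[1] for p in g) / len(g)
            g_rt = sum(p[2] for p in g) / len(g)
            # Join the first group whose running means are within tolerance.
            if abs(mz - g_mz) / g_mz * 1e6 <= mz_ppm and abs(rt - g_rt) <= rt_tol:
                g.append(peak)
                break
        else:
            groups.append([peak])
    return groups

def feature_table(groups, samples):
    """One row per group; None marks a sample with no detected peak."""
    table = []
    for g in groups:
        row = {"mz": sum(p[1] for p in g) / len(g),
               "rt": sum(p[2] for p in g) / len(g)}
        for s in samples:
            intensities = [p[3] for p in g if p[0] == s]
            row[s] = max(intensities) if intensities else None
        table.append(row)
    return table
```

The `None` cells are exactly what a gap-filling step would revisit, by integrating the raw signal in the expected m/z and RT region of the affected sample.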

Protocol for RT Alignment using MZmine

Step 1: Peak Detection and Peak List Building

- Process raw data files through MZmine's peak detection modules. The software supports multiple algorithms, including:

- Local Maxima: A simple method for well-defined spectra.

- Wavelet Transform: Suitable for noisy data, based on continuous wavelet transform matched to a "Mexican hat" model [22].

- The result is a peak list for each sample.

Step 2: Configuring the RANSAC Aligner

- Run the RANSAC aligner module, which extends MZmine's Join aligner with the RANSAC algorithm.

- The `RANSACParameters` class handles the user-configurable settings. Critical parameters include:

- `mzTolerance`: The maximum allowed m/z difference for two peaks to be considered a match.

- `RTTolerance`: The maximum allowed retention time difference before alignment.

- `Iterations`: The number of RANSAC iterations to perform.

- The `RANSACPeakAlignmentTask` class contains the logic for executing the alignment [22].

Step 3: Executing the RANSAC Algorithm

- The algorithm works by iteratively selecting random subsets of potential peak matches to form a candidate warping model.

- It evaluates how many other peaks in the dataset are consistent with this model (the "consensus set").

- The model with the largest consensus set is chosen as the optimal warping function, making the alignment robust to outlier peaks that do not fit the general RT shift pattern [22].
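The three steps above can be sketched as a generic RANSAC loop. This example assumes a linear RT model for simplicity and is not MZmine's implementation; the function name, the tolerance, and the iteration count are illustrative.

```python
import random

def ransac_rt_model(pairs, n_iter=1000, tol=0.2, seed=0):
    """Fit rt_reference = slope * rt_sample + intercept with RANSAC.

    pairs: list of (rt_sample, rt_reference) candidate peak matches.
    Returns (slope, intercept, inliers) for the largest consensus set.
    """
    rng = random.Random(seed)
    best = (1.0, 0.0, [])
    for _ in range(n_iter):
        # Draw a minimal random subset: two matches define a line.
        (x1, y1), (x2, y2) = rng.sample(pairs, 2)
        if x1 == x2:
            continue
        slope = (y2 - y1) / (x2 - x1)
        intercept = y1 - slope * x1
        # Consensus set: all matches consistent with this candidate model.
        inliers = [(x, y) for x, y in pairs
                   if abs(slope * x + intercept - y) <= tol]
        if len(inliers) > len(best[2]):
            best = (slope, intercept, inliers)
    return best
```

Candidate matches that fall outside the consensus set are ignored when the model is chosen, which is what makes the fit insensitive to spurious peak pairs.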

Step 4: Review and Export

- Inspect the aligned peak list within MZmine's interactive table and visualization windows.

- Export the final, aligned feature table for downstream statistical analysis.

The logical flow of the RANSAC alignment process within MZmine's modular framework is illustrated below.

The Scientist's Toolkit: Essential Research Reagents and Software

Successful implementation of RT alignment protocols relies on a suite of software tools and computational resources. The following table details key components of the research toolkit.

Table 2: Essential Research Reagents and Software Solutions

| Tool/Resource | Function in RT Alignment Research | Source/Availability |

|---|---|---|

| XCMS R Package | Open-source software for peak picking, alignment, and statistical analysis of LC/MS data [23]. | Available via Bioconductor [23]. |

| MZmine 2 | Modular, open-source framework for processing, visualizing, and analyzing MS-based molecular profile data [22]. | Available from http://mzmine.sourceforge.net/ [22]. |

| Galaxy / W4M | Web-based platform providing a user-friendly interface for XCMS workflows, enabling tool use without advanced programming [23]. | Public instance at https://workflow4metabolomics.us/ [23]. |

| metabCombiner | An R package for matching features in disparately acquired LC-MS data sets, overcoming significant RT alterations [27]. | R package at https://github.com/hhabra/metabCombiner [27]. |

| DeepRTAlign | A deep learning-based tool demonstrating improved performance over traditional warping for complex monotonic/non-monotonic shifts [3]. | Method described in Nature Communications [3]. |

| PARSEC | A post-acquisition strategy for improving metabolomics data comparability across separate studies or batches [28]. | Method described in Analytica Chimica Acta [28]. |

Traditional warping methods, as implemented in XCMS and MZmine, provide robust and well-established solutions for the crucial data preprocessing step of RT alignment. While their core warping function approach is highly effective for correcting monotonic RT shifts, a key limitation is their inability to handle non-monotonic shifts [3]. The emergence of new computational strategies, including deep learning-based tools like DeepRTAlign and advanced post-acquisition correction workflows like PARSEC, points toward the future of alignment research [3] [28]. These next-generation methods aim to overcome the limitations of traditional algorithms, particularly for integrating and performing meta-analyses on large-scale cohort data acquired under disparate conditions, thereby enhancing the reproducibility and scalability of HRMS-based studies [27] [28].

Advanced Direct Matching and Multi-Dataset Alignment with metabCombiner

Liquid Chromatography–High-Resolution Mass Spectrometry (LC-HRMS) has become an indispensable analytical technique in untargeted metabolomics, enabling the simultaneous detection of thousands of small molecules in biological samples [29]. A fundamental challenge in processing this complex data involves feature alignment, a computational process where LC-MS features derived from common ions across multiple samples or datasets are assembled into a unified data matrix suitable for statistical analysis [29] [30]. This alignment process is crucial for comparative analysis but is significantly complicated by analytical variability introduced when data is acquired across different laboratories, generated using non-identical instruments, or collected in multiple batches of large-scale studies [29]. Such variability manifests as retention time (RT) shifts that can be substantial (up to several minutes) and cannot be adequately corrected using conventional alignment approaches [29].

Several computational strategies have been developed to address the LC-MS alignment problem. Traditional methods can be broadly categorized into warping function approaches (e.g., XCMS, MZmine 2, OpenMS), which correct RT shifts using linear or non-linear warping functions but struggle with non-monotonic shifts, and direct matching methods (e.g., RTAlign, MassUntangler, Peakmatch), which perform correspondence based on signal similarity without a warping function but often exhibit inferior performance due to MS signal uncertainty [3]. More recently, deep learning approaches such as DeepRTAlign have emerged, combining pseudo warping functions with deep neural networks to handle both monotonic and non-monotonic RT shifts [3]. Additionally, optimal transport methods like GromovMatcher leverage correlation structures between feature intensities and advanced mathematical frameworks to align datasets [31]. Within this evolving landscape, metabCombiner occupies a unique position as a robust solution specifically designed for aligning disparately acquired LC-MS metabolomics datasets through a direct matching framework with retention time mapping capabilities [29].

metabCombiner Technical Framework

Core Algorithmic Architecture

metabCombiner employs a stepwise alignment workflow that enables the integration of multiple untargeted LC-MS metabolomics datasets through a cyclical process consisting of six distinct phases [29]. The software introduces a multi-dataset representation class called the "metabCombiner object," which serves as the main framework for executing the package workflow steps [29]. This object maintains two closely linked report tables: a combined table containing possible feature pair alignments (FPAs) with their associated per-sample abundances and alignment scores, and a feature data table that organizes aligned features and their descriptors by constituent dataset of origin [29].

A key innovation in metabCombiner 2.0 is its use of a template-based matching strategy, where one input object is designated as the projection ("X") feature list and the other serves as the reference ("Y") [29]. In this framework, a "primary" feature list acts as a template for matching compounds in "target" feature lists, facilitating inter-laboratory reproducibility studies [29]. The algorithm constructs a combined table showing possible FPAs arranged into m/z-based groups, constrained by a 'binGap' parameter [29]. For each feature pair, the table includes a 'score' column representing calculated similarity, rankX and rankY ordering alignment scores by individual features, and "rtProj" showing the mapping of retention times from the projection set to the reference [29].

Workflow and Process Diagram

The metabCombiner alignment process follows a structured, cyclical workflow consisting of six method steps that transform raw feature tables into aligned datasets [29]. The following diagram illustrates this comprehensive process:

Quantitative Similarity Scoring System

The similarity scoring system in metabCombiner represents a sophisticated computational approach that evaluates potential feature matches across multiple dimensions. The calcScores() function computes a similarity score between 0 and 1 for all grouped feature pairs using an exponential penalty function that accounts for differences in m/z, retention time (comparing model-projected RTy versus observed RTy), and quantile abundance (Q) [29]. This multi-parameter approach ensures that the highest scores are assigned to feature pairs with minimal differences across all three critical dimensions.

Following score calculation, pairwise score ranks (rankX and rankY) are computed for each unique feature with respect to their complements [29]. The most plausible matches are ranked first (rankX = 1 and rankY = 1) and typically score close to 1, providing a straightforward mechanism for identifying high-confidence alignments [29]. The algorithm also incorporates a conflict resolution system that identifies and resolves competing alignment hypotheses, particularly for closely eluting isomers, by selecting the combination of feature pair alignments within each subgroup with the highest sum of scores [29].
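A sketch of such a score and the pairwise ranks is shown below. The exact penalty form, the weights `A`, `B`, `C`, and the normalization of the RT error by the chromatographic range are assumptions chosen to match the description (an exponential penalty over m/z, RT, and quantile abundance differences); consult the package documentation for the actual definition.

```python
import math

def similarity_score(mz_x, mz_y, rt_proj, rt_y, q_x, q_y, rt_range,
                     A=75.0, B=10.0, C=0.25):
    """Score in (0, 1]; equals 1 only when all three differences are zero."""
    penalty = (A * abs(mz_x - mz_y)
               + B * abs(rt_proj - rt_y) / rt_range  # projected vs observed RT
               + C * abs(q_x - q_y))                 # quantile abundance
    return math.exp(-penalty)

def add_ranks(pairs):
    """Add rankX and rankY to each candidate pair, in place.

    pairs: list of dicts with keys 'x', 'y', and 'score'. rankX orders the
    candidate partners of each X feature by descending score; rankY does
    the same for each Y feature.
    """
    for key, rank_name in (("x", "rankX"), ("y", "rankY")):
        by_feature = {}
        for p in pairs:
            by_feature.setdefault(p[key], []).append(p)
        for candidates in by_feature.values():
            ordered = sorted(candidates, key=lambda c: -c["score"])
            for rank, p in enumerate(ordered, start=1):
                p[rank_name] = rank
    return pairs
```

Pairs with rankX = 1 and rankY = 1 are each other's best candidate, which is why they form the natural high-confidence set.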

Comparative Analysis of Alignment Methodologies

Feature Comparison with Alternative Approaches

Table 1: Comparative analysis of LC-MS alignment methodologies

| Method | Algorithm Type | RT Correction Approach | Multi-Dataset Capability | Strengths | Limitations |

|---|---|---|---|---|---|

| metabCombiner | Direct matching with warping | Penalized basis spline (GAM) | Yes (stepwise) | Handles disparate datasets; maintains non-matched features; requires no identified peptides | Limited functionality for >3 tables in initial version |

| DeepRTAlign [3] | Deep learning | Coarse alignment + DNN refinement | Limited | Handles monotonic and non-monotonic shifts; improved identification sensitivity | Requires significant training data; computational complexity |

| GromovMatcher [31] | Optimal transport | Nonlinear map via weighted spline regression | Yes | Uses correlation structures; robust to data variations; minimal parameter tuning | Limited validation with non-curated datasets |

| ROIMCR [15] [32] | Multivariate curve resolution | Not required (direct component analysis) | Yes | Processes positive/negative data simultaneously; reduces dimensionality | Lower treatment sensitivity; different conceptual approach |

| Traditional Warping (XCMS, MZmine) [3] | Warping function | Linear/non-linear warping | Limited | Established methodology; extensive community use | Cannot correct non-monotonic shifts; struggles with disparate data |

Performance Benchmarking

When evaluated on experimental data, metabCombiner has demonstrated robust performance in challenging alignment scenarios. In an inter-laboratory lipidomics study involving four core laboratories using different in-house LC-MS instrumentation and methods, metabCombiner successfully aligned datasets despite significant analytical variability [29] [30]. The method's template-based approach allowed for the stepwise integration of multiple datasets, facilitating reproducibility assessments across participating institutions [29].

Comparative benchmarking studies have revealed that alignment tools exhibit significantly different characteristics in practical applications. While feature profiling methods like MZmine 3 show increased sensitivity to treatment effects, they also demonstrate increased susceptibility to false positives [32]. Conversely, component-based approaches like ROIMCR provide superior consistency and reproducibility but may exhibit lower treatment sensitivity [32]. These findings highlight the importance of selecting alignment methodologies appropriate for specific research objectives and data characteristics.

Experimental Protocols

Multi-Dataset Alignment Procedure

Protocol 1: Stepwise Alignment of Disparate LC-MS Datasets

This protocol describes the procedure for aligning multiple disparately acquired LC-MS metabolomics datasets using metabCombiner 2.0, demonstrated through an inter-laboratory lipidomics study with four participating core laboratories [29].

Input Data Preparation

- Process raw LC-MS data from each laboratory using feature detection software (XCMS, MZmine, or MS-DIAL) to generate feature tables [29]

- Format all feature tables as `metabData` objects using the `metabData()` constructor function

- Apply filters to exclude features based on proportion missingness and retention time ranges

- Merge duplicate features representing the same compound into single representative rows [29]

metabCombiner Object Construction

- Construct a `metabCombiner` object from two single datasets, a single and combined dataset, or two combined dataset objects

- Designate one input object as the projection ("X") feature list and the other as the reference ("Y")

- Assign unique identifiers to each dataset for tracking throughout the alignment process [29]

Retention Time Mapping and Alignment

- Execute the `selectAnchors()` function to choose feature pairs among highly abundant compounds for modeling RT warping

- Run `fit_gam()` to compute a penalized basis spline model for RT mapping using selected anchors

- Constrain the RT mapping to appropriate ranges by removing empty head or tail chromatographic regions [29]

- Perform `calcScores()` to compute similarity scores (0-1) for all grouped feature pairs

Feature Table Reduction and Annotation

- Apply reduceTable() to assign one-to-one correspondence between feature pairs using calculated alignment scores and ranks

- Implement thresholding for alignment scores, pairwise ranks, and RT prediction errors to exclude over 90% of mismatches

- Resolve conflicting alignment possibilities using the integrated competitive hypothesis testing [29]
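To make the reduction step concrete, here is a small Python sketch of thresholding followed by a greedy one-to-one assignment. It is a generic stand-in and not the algorithm implemented in reduceTable(); the `Candidate` type, the threshold values, and the feature identifiers are assumptions for illustration.

```python
from typing import NamedTuple

class Candidate(NamedTuple):
    x_id: str
    y_id: str
    score: float      # alignment score in [0, 1]
    rt_error: float   # absolute RT prediction error in minutes

def reduce_pairs(candidates, min_score=0.5, max_rt_error=0.5):
    """Keep at most one partner per feature, preferring high-scoring pairs."""
    # Threshold first to discard obvious mismatches.
    kept = [c for c in candidates
            if c.score >= min_score and c.rt_error <= max_rt_error]
    used_x, used_y, result = set(), set(), []
    # Greedy pass from the best to the worst score.
    for c in sorted(kept, key=lambda c: c.score, reverse=True):
        if c.x_id not in used_x and c.y_id not in used_y:
            result.append(c)
            used_x.add(c.x_id)
            used_y.add(c.y_id)
    return result

candidates = [
    Candidate("x1", "y1", 0.95, 0.05),
    Candidate("x1", "y2", 0.70, 0.20),  # conflicts with the better x1-y1 pair
    Candidate("x2", "y2", 0.85, 0.10),
    Candidate("x3", "y3", 0.30, 0.05),  # fails the score threshold
    Candidate("x4", "y4", 0.90, 1.20),  # fails the RT error threshold
]
matches = reduce_pairs(candidates)
```

A greedy pass is easy to follow but does not guarantee a globally optimal assignment when many candidates conflict.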

Multi-Dataset Integration

- Utilize updateTables() to restore features from original inputs lacking complementary matches

- Repeat the alignment cycle to incorporate additional single or combined datasets

- Export the final aligned feature matrix containing matched features and their abundances across all datasets [29]

Batch Alignment for Large-Scale Studies

Protocol 2: batchCombine for Multi-Batch Experiments

This protocol outlines the application of the metabCombiner framework for aligning experiments composed of multiple batches, serving as an alternative to processing large datasets in single batches [29].

Batch Data Organization

- Organize feature tables by batch, ensuring consistent formatting across all batches

- Designate a primary batch with highest data quality to serve as the alignment template

Sequential Batch Processing

- Align the primary batch with the first secondary batch using the standard metabCombiner workflow

- Use the resulting combined dataset as the reference for subsequent batch alignments

- Iterate through all batches until complete dataset integration is achieved [29]
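The sequential pattern above can be sketched in Python as a loop in which the combined table from each step becomes the reference for the next. This is only an illustration of the control flow: the `align_pair` helper is hypothetical and matches features with fixed m/z and RT tolerances, whereas the actual workflow models RT drift between batches.

```python
def align_pair(reference, batch_name, features, mz_tol=0.005, rt_tol=0.3):
    """Match one batch against the running reference table.

    reference: list of rows {"mz", "rt", "abundance": {batch_name: value}}
    features:  list of (mz, rt, abundance) tuples from the incoming batch
    """
    # Copy the rows so the caller's reference table is left untouched.
    combined = [{"mz": r["mz"], "rt": r["rt"], "abundance": dict(r["abundance"])}
                for r in reference]
    for mz, rt, abundance in features:
        for row in combined:
            same_compound = (abs(row["mz"] - mz) <= mz_tol
                             and abs(row["rt"] - rt) <= rt_tol)
            if same_compound and batch_name not in row["abundance"]:
                row["abundance"][batch_name] = abundance
                break
        else:
            # No partner found: carry the feature forward as batch-specific.
            combined.append({"mz": mz, "rt": rt, "abundance": {batch_name: abundance}})
    return combined

# Toy batches; the first entry plays the role of the primary batch.
batches = {
    "batch1": [(100.000, 1.00, 10.0), (200.000, 5.00, 20.0)],
    "batch2": [(100.001, 1.10, 11.0), (300.000, 8.00, 30.0)],
    "batch3": [(200.002, 5.20, 22.0), (300.001, 8.10, 33.0)],
}

names = list(batches)
table = align_pair([], names[0], batches[names[0]])
for name in names[1:]:
    # The combined result so far serves as the reference for the next batch.
    table = align_pair(table, name, batches[name])
```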

Quality Assessment and Validation

- Examine the distribution of alignment scores across batches to identify potential issues

- Verify retention time mapping consistency across all integrated batches

- Assess the proportion of features successfully matched versus those carried forward as unique to specific batches

Research Toolkit for LC-MS Alignment

Table 2: Essential research reagents and computational tools for LC-MS alignment studies

| Category | Item/Software | Specifications | Application Function |
|---|---|---|---|
| Software Packages | metabCombiner | R package (Bioconductor), R Shiny App | Primary alignment tool for disparate datasets |
| Software Packages | XCMS [29] | Open-source R package | Feature detection and initial processing |
| Software Packages | MZmine [29] | Java-based platform | Alternative feature detection and processing |
| Software Packages | MS-DIAL [29] | Comprehensive platform | Data processing and preliminary alignment |
| Data Objects | metabData object | Formatted feature table (m/z, RT, abundance) | Single dataset representation class |
| Data Objects | metabCombiner object | Multi-dataset representation | Main framework for executing alignment workflow |
| Instrumentation | LC-HRMS Systems | Various vendors (Thermo, Waters, etc.) | Raw data generation with high mass accuracy |
| Reference Materials | Quality Control Samples | Matrix-matched with study samples | Monitoring instrument performance and alignment quality |

Advanced Applications and Implementation

Inter-Laboratory Reproducibility Assessment

The enhanced multi-dataset alignment capability of metabCombiner 2.0 enables systematic reproducibility assessments across laboratories and analytical platforms. In the demonstrated inter-laboratory lipidomics study, the algorithm successfully aligned datasets from four core laboratories generated using each institution's in-house LC-MS instrumentation and methods [29]. This application highlights metabCombiner's utility in addressing the significant challenges to data interoperability that persist despite efforts to standardize protocols in the metabolomics field [29].

For implementation of inter-laboratory studies, researchers should designate a reference dataset with the highest data quality or most comprehensive feature detection to serve as the primary alignment template. Subsequent laboratory datasets can then be sequentially aligned to this reference, with careful documentation of alignment quality metrics for each pairwise combination. This approach facilitates the identification of systematic biases and platform-specific sensitivities that may impact cross-study comparisons and meta-analyses.

Integration with Downstream Bioinformatics Workflows

Aligned feature matrices generated by metabCombiner serve as critical inputs for subsequent metabolomic data analysis steps. The unified data structure enables reliable comparative statistics to identify differentially abundant metabolites across experimental conditions, datasets, or laboratories. Additionally, the aligned features can be integrated with pathway analysis tools to elucidate altered metabolic pathways in biological studies.

For the ELEMENT (Early Life Exposures in Mexico to Environmental Toxicants) cohort study, which involved multi-batch untargeted LC-MS metabolomics analyses of fasting blood serum from Mexican adolescents, the batchCombine application of metabCombiner provided an effective solution for handling the significant chromatographic drift encountered between batches in large-scale studies [29]. This demonstrates the method's utility in epidemiological applications where data collection necessarily spans extended periods and multiple analytical batches.

Liquid chromatography-mass spectrometry (LC-MS) is a cornerstone technique in proteomics and metabolomics, enabling the separation, identification, and quantification of thousands of analytes in complex biological samples. However, a persistent challenge in experiments involving multiple samples is the shift in analyte retention time (RT) across different LC-MS runs. These shifts, caused by factors such as matrix effects and instrumental performance variations, complicate the correspondence process—the critical task of matching the same compound across multiple samples [3] [33]. In large cohort studies, which are essential for robust biomarker discovery and systems biology, accurate alignment becomes a major bottleneck [34].

Traditional computational strategies for RT alignment fall into two main categories. The warping function method (used by tools like XCMS, MZmine 2, and OpenMS) corrects RT shifts using a linear or non-linear warping function. A key limitation of this approach is its inherent inability to handle non-monotonic RT shifts because the warping function itself is monotonic [3] [33]. The direct matching method (exemplified by tools like RTAlign and MassUntangler) attempts correspondence based on signal similarity without a warping function but often underperforms due to the uncertainty of MS signals [3]. Consequently, existing tools struggle with complex RT shifts commonly found in large-scale clinical datasets. DeepRTAlign was developed to overcome these limitations by integrating a robust coarse alignment with a deep learning-based direct matching strategy, proving effective for both monotonic and non-monotonic shifts [3] [34].

DeepRTAlign: Methodology and Workflow

DeepRTAlign employs a hybrid workflow that synergizes a traditional coarse alignment with an advanced deep neural network (DNN). The entire process is divided into a training phase (which produces a reusable model) and an application phase (which uses the model to align new datasets) [3].

Detailed Workflow and Input Vector Construction

The workflow begins with precursor detection and feature extraction. While DeepRTAlign uses an in-house tool called XICFinder for this purpose, it is highly flexible and supports feature lists from other popular tools like Dinosaur, OpenMS, and MaxQuant, requiring only simple text or CSV files containing m/z, charge, RT, and intensity information [3] [35].

Next, a coarse alignment is performed to handle large-scale monotonic shifts. The retention times in all samples are first linearly scaled to a common range (e.g., 80 minutes). An anchor sample (typically the first sample) is selected, and all other samples are divided into fixed RT windows (e.g., 1 minute). For each window, features are compared to the anchor sample within a small m/z tolerance (e.g., 0.01 Da). The average RT shift for matched features within the window is calculated, and this average shift is applied to all features in that window to coarsely align it with the anchor [3].
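The coarse alignment described above can be sketched in Python roughly as follows. This is not DeepRTAlign's source code: the function name and the rule for picking a match (the anchor feature nearest in RT within the m/z tolerance) are assumptions, and the toy data are invented.

```python
import numpy as np

def coarse_align(sample, anchor, rt_max=80.0, window=1.0, mz_tol=0.01):
    """Shift each RT window of `sample` by its average offset from `anchor`.

    Both inputs are arrays of (mz, rt) rows.
    """
    sample = sample.astype(float).copy()
    anchor = anchor.astype(float).copy()
    # Linearly scale both runs to a common RT range.
    sample[:, 1] *= rt_max / sample[:, 1].max()
    anchor[:, 1] *= rt_max / anchor[:, 1].max()

    aligned = sample.copy()
    for start in np.arange(0.0, rt_max + window, window):
        in_window = (sample[:, 1] >= start) & (sample[:, 1] < start + window)
        shifts = []
        for mz, rt in sample[in_window]:
            # Anchor features within the m/z tolerance; use the nearest in RT.
            candidates = anchor[np.abs(anchor[:, 0] - mz) <= mz_tol, 1]
            if candidates.size:
                shifts.append(candidates[np.argmin(np.abs(candidates - rt))] - rt)
        if shifts:
            # Apply the window's average shift to every feature in it.
            aligned[in_window, 1] += np.mean(shifts)
    return aligned

anchor = np.array([[400.000, 10.2], [500.000, 10.6], [600.000, 40.0], [700.000, 80.0]])
sample = np.array([[400.001, 10.0], [500.001, 10.4], [550.000, 10.8],
                   [600.001, 40.5], [700.001, 80.0]])
aligned = coarse_align(sample, anchor)
```

In the toy data, the feature at m/z 550 has no partner in the anchor sample but still moves by the 0.2 minute average shift of its window.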

After coarse alignment, features are binned and filtered. Binning groups features based on their m/z values within a user-defined window (bin_width, default 0.03) and precision (bin_precision, default 2 decimal places). This step ensures that only features with similar m/z are considered for alignment, drastically reducing computational complexity. An optional filtering step can retain only the most intense feature within a specified RT range for each sample in each m/z bin [3] [35].
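A rough Python sketch of binning and the optional intensity filter is shown below. How DeepRTAlign combines bin_width and bin_precision internally is not described here, so the interpretation used (round m/z to bin_precision decimals, then group into bins of bin_width) is an assumption, as are the helper names and the `rt_range` parameter.

```python
from collections import defaultdict

def bin_features(features, bin_width=0.03, bin_precision=2):
    """Group features whose rounded m/z falls into the same bin."""
    # Work in integer units of 10**-bin_precision to avoid float edge effects.
    scale = 10 ** bin_precision
    step = round(bin_width * scale)
    bins = defaultdict(list)
    for feat in features:
        bins[round(feat["mz"] * scale) // step].append(feat)
    return bins

def keep_most_intense(bin_features_list, rt_range=1.0):
    """Per sample and RT block of width `rt_range`, keep the most intense feature."""
    best = {}
    for feat in bin_features_list:
        key = (feat["sample"], int(feat["rt"] // rt_range))
        if key not in best or feat["intensity"] > best[key]["intensity"]:
            best[key] = feat
    return list(best.values())

features = [
    {"sample": "A", "mz": 500.021, "rt": 10.2, "intensity": 1e5},
    {"sample": "A", "mz": 500.024, "rt": 10.4, "intensity": 3e5},
    {"sample": "B", "mz": 500.022, "rt": 10.3, "intensity": 2e5},
    {"sample": "B", "mz": 500.110, "rt": 10.3, "intensity": 4e5},
]

bins = bin_features(features)
largest_bin = max(bins.values(), key=len)
filtered = keep_most_intense(largest_bin)
```

Only features that share a bin are later compared pairwise, which is where the reduction in computational cost comes from.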

A critical innovation of DeepRTAlign is its input vector construction. Inspired by word embedding methods in natural language processing, the model considers the contextual neighborhood of a feature. For a target feature pair from two samples, the input vector incorporates the RT and m/z of the two target features, plus the two adjacent features (before and after) in each sample based on RT. This creates a comprehensive vector that includes both original values and difference values between the samples, which are then normalized using base vectors ([5, 0.03] for differences and [80, 1500] for original values). The final input to the DNN is a 5x8 vector that richly represents the feature and its local context [3] [34].
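One way such an input could be assembled is sketched below in Python. The base vectors come from the description above, but the row and column layout is an assumption: five rows for the target feature and its two RT neighbors on each side, and four column blocks for the sample 1 values, two difference blocks, and the sample 2 values. The definition of the difference blocks and the edge padding are likewise illustrative choices.

```python
import numpy as np

BASE_ORIGINAL = np.array([80.0, 1500.0])  # normalizes raw (RT, m/z) values
BASE_DIFF = np.array([5.0, 0.03])         # normalizes (RT, m/z) differences

def neighborhood(features, index, k=2):
    """Return the target feature and k RT neighbors on each side as a (2k+1, 2) array.

    `features` holds (rt, mz) rows sorted by RT; edges are padded by repetition.
    """
    padded = np.pad(features, ((k, k), (0, 0)), mode="edge")
    return padded[index:index + 2 * k + 1]

def build_input(sample1, i, sample2, j, k=2):
    """Assemble a (2k+1) x 8 input for the feature pair (sample1[i], sample2[j])."""
    n1 = neighborhood(sample1, i, k)
    n2 = neighborhood(sample2, j, k)
    part1 = n1 / BASE_ORIGINAL            # original values, sample 1
    part2 = (n1 - sample2[j]) / BASE_DIFF  # sample 1 context relative to sample 2 target
    part3 = (n2 - sample1[i]) / BASE_DIFF  # sample 2 context relative to sample 1 target
    part4 = n2 / BASE_ORIGINAL            # original values, sample 2
    return np.hstack([part1, part2, part3, part4])

sample1 = np.array([[10.0, 500.00], [10.5, 500.01], [11.0, 500.02],
                    [11.5, 500.03], [12.0, 500.04]])
sample2 = sample1 + np.array([0.2, 0.0])  # same features, shifted by 0.2 min

vec = build_input(sample1, 2, sample2, 2)
```

Flattening this array gives 40 values per feature pair, which is the input size the classifier would see under this layout.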

Deep Neural Network Architecture and Training

The core of DeepRTAlign is a deep neural network with three hidden layers, each containing 5000 neurons [3]. The network functions as a binary classifier, determining whether a pair of features from two different samples should be aligned (positive class) or not (negative class).

- Training Data: The model is trained on a large set of 400,000 feature-feature pairs. Half of these are positive pairs, derived from the same peptides based on identification results (from search engines like Mascot), and the other half are negative pairs, collected from different peptides but with a small m/z tolerance (0.03 Da) [3].

- Hyperparameters: The model uses the BCELoss loss function from PyTorch and the sigmoid activation function. Optimization is performed using the Adam optimizer with an initial learning rate of 0.001, which is reduced by a factor of 10 every 100 epochs. Training runs for 400 epochs with a batch size of 500 [3].

- Quality Control: A crucial feature of DeepRTAlign is its built-in quality control module, which estimates the false discovery rate (FDR) of the alignment results. For each m/z window, a decoy sample is randomly created. Since features in this decoy should not genuinely align, the rate at which they are incorrectly aligned provides an FDR estimate, allowing users to filter results with a desired confidence level (e.g., FDR < 1%) [3].
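The architecture and hyperparameters listed above map onto PyTorch roughly as in the sketch below. It is not the DeepRTAlign source: the flattened input size of 40, the use of sigmoid in the hidden layers as well as the output, and the helper names are assumptions. The demonstration uses random data and a small hidden size so that it runs quickly.

```python
import torch
from torch import nn

def build_classifier(n_inputs=40, n_hidden=5000):
    """Three fully connected hidden layers followed by a single sigmoid output."""
    return nn.Sequential(
        nn.Linear(n_inputs, n_hidden), nn.Sigmoid(),
        nn.Linear(n_hidden, n_hidden), nn.Sigmoid(),
        nn.Linear(n_hidden, n_hidden), nn.Sigmoid(),
        nn.Linear(n_hidden, 1), nn.Sigmoid(),  # probability that the pair should be aligned
    )

def train(model, inputs, labels, epochs=400, batch_size=500):
    """Train with BCELoss and Adam, dividing the learning rate by 10 every 100 epochs."""
    loss_fn = nn.BCELoss()
    optimizer = torch.optim.Adam(model.parameters(), lr=0.001)
    scheduler = torch.optim.lr_scheduler.StepLR(optimizer, step_size=100, gamma=0.1)
    for _ in range(epochs):
        order = torch.randperm(len(inputs))  # reshuffle pairs every epoch
        for start in range(0, len(inputs), batch_size):
            idx = order[start:start + batch_size]
            optimizer.zero_grad()
            loss = loss_fn(model(inputs[idx]).squeeze(1), labels[idx])
            loss.backward()
            optimizer.step()
        scheduler.step()
    return model

# Tiny demonstration: random inputs, random 0/1 labels, small network, two epochs.
torch.manual_seed(0)
demo_x = torch.rand(64, 40)
demo_y = (torch.rand(64) > 0.5).float()
demo_model = train(build_classifier(n_hidden=16), demo_x, demo_y, epochs=2, batch_size=32)
demo_prob = demo_model(demo_x).detach()
```

With the default hidden size of 5000, the three hidden layers alone hold tens of millions of weights, so training at full scale benefits from a GPU.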

The following diagram illustrates the complete DeepRTAlign workflow, from raw data input to the final aligned feature list.

Performance Benchmarking and Quantitative Evaluation

DeepRTAlign has been rigorously benchmarked against state-of-the-art tools like MZmine 2 and OpenMS across multiple real-world and simulated proteomic and metabolomic datasets [3] [33]. The performance is typically evaluated using precision (the fraction of correctly aligned features among all aligned features) and recall (the fraction of true corresponding features that are successfully aligned) [33].
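As a small worked example of these two metrics, the Python sketch below computes precision and recall from a set of aligned feature pairs and a ground-truth set. The pair identifiers are invented for illustration.

```python
def precision_recall(aligned_pairs, true_pairs):
    """Return (precision, recall) for a set of aligned feature pairs."""
    aligned, truth = set(aligned_pairs), set(true_pairs)
    correct = aligned & truth
    precision = len(correct) / len(aligned) if aligned else 0.0
    recall = len(correct) / len(truth) if truth else 0.0
    return precision, recall

# Ground truth: four features that genuinely correspond across two samples.
truth = {("s1_f1", "s2_f1"), ("s1_f2", "s2_f2"),
         ("s1_f3", "s2_f3"), ("s1_f4", "s2_f4")}
# Tool output: two correct pairs, one wrong pair, one true pair missed.
aligned = {("s1_f1", "s2_f1"), ("s1_f2", "s2_f2"), ("s1_f3", "s2_f9")}

precision, recall = precision_recall(aligned, truth)
```

Here two of the three reported pairs are correct (precision 2/3) and two of the four true pairs are recovered (recall 1/2).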

Performance on Diverse Datasets

The following table summarizes the documented performance advantages of DeepRTAlign over existing methods on various test datasets.

Table 1: Performance Benchmarking of DeepRTAlign Across Diverse Datasets

| Dataset Name | Sample Numbers | Key Finding | Performance Improvement | Reference |
|---|---|---|---|---|
| HCC (Liver Cancer) | 101 Tumor + 101 Non-Tumor | Improved biomarker discovery classifier | AUC of 0.995 for recurrence prediction | [34] [33] |
| Single-Cell DIA | Not Specified | Increased peptide identifications | 298 more peptides aligned per cell vs. DIA-NN | [33] |
| Multiple Test Sets | 6 Datasets | Average performance increase | ~7% higher precision, ~20% higher recall | [33] |
| UPS2-Y / UPS2-M | 12 per set | Handles complex samples better | Outperformed MZmine 2 & OpenMS | [3] |

Comparison with Machine Learning Models

Beyond traditional tools, DeepRTAlign's DNN has been compared against other machine learning classifiers, including Random Forests (RF), k-Nearest Neighbors (KNN), Support Vector Machine (SVM), and Logistic Regression (LR). After parameter optimization, the DNN consistently demonstrated superior performance, confirming that the depth and architecture of the neural network are well-suited for this complex matching task [33].

Application Notes and Experimental Protocols

This section provides a detailed, step-by-step protocol for applying DeepRTAlign to a typical large-cohort LC-MS dataset, enabling researchers to replicate and implement this method successfully.

Protocol: Aligning a Large-Cohort LC-MS Dataset using DeepRTAlign

Objective: To accurately align LC-MS features across multiple samples in a large cohort study using DeepRTAlign, enabling downstream comparative analysis.

I. Prerequisite Software and Data Preparation

- Install DeepRTAlign: Install the tool using pip with the command pip install deeprtalign [35]. The software is compatible with Windows 10, Ubuntu 18.04, and macOS 12.1.

- Input Data Preparation:

- Feature Lists: Generate feature lists for each sample in your cohort using a supported feature extraction tool (e.g., Dinosaur, OpenMS, MaxQuant, XICFinder). Alternatively, prepare a custom text (TXT) or comma-separated value (CSV) file containing the following columns for each feature: m/z, charge, retention time (RT), and intensity [35].